Publication date: 2020/10/30

Estimating the 6D pose (3D position and 3D orientation) of objects from an image is one of the key tasks for autonomous systems equipped with visual sensors. With a precisely estimated pose, a robot can grasp the object and manipulate it further. Examples include a home robotic helper that loads a dishwasher automatically, or a production robot that handles items on the production line based on visual input.

The paper “CosyPose: Consistent multi-view multi-object 6D pose estimation” by Yann Labbé (Inria), Justin Carpentier (Inria), Mathieu Aubry (ENPC) and Josef Šivic (CIIRC CTU) won five awards in the 6D object pose estimation competition at the ECCV 2020 conference.

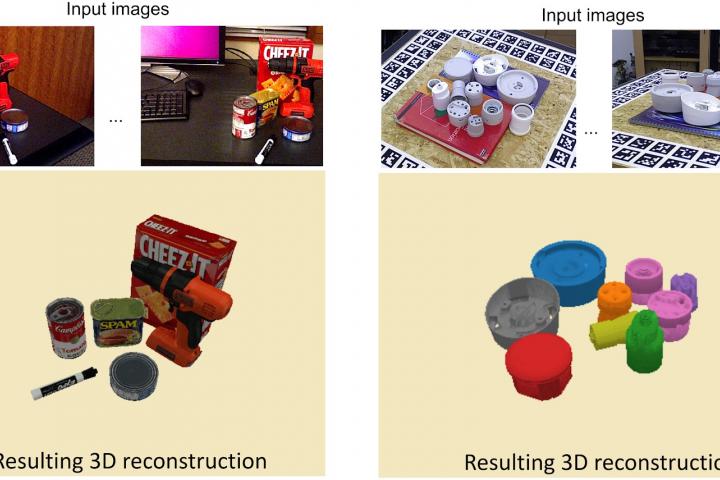

The newly developed CosyPose method estimates the 6D poses of multiple objects in a scene captured by one or more input images. Its main innovation is combining deep neural networks with methods for estimating geometric transformations from multiple images.
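
To make the geometric side concrete, here is a minimal sketch of what a 6D pose is and how poses from two views relate to each other. It is not the CosyPose implementation: the helper names (`make_pose`, `pose_error`) and all numeric values are hypothetical and chosen only for illustration. A 6D pose is represented as a 4x4 homogeneous transform (3D rotation plus 3D translation). If the relative transform between two cameras is known, the pose of an object seen from one camera can be expressed in the other camera's frame, which is the kind of consistency a multi-view method can check.

```python
import numpy as np


def make_pose(rotation_z_deg, translation):
    """Build a 4x4 homogeneous transform from a rotation about the z axis
    (in degrees) and a 3D translation. Illustrative helper only."""
    theta = np.deg2rad(rotation_z_deg)
    c, s = np.cos(theta), np.sin(theta)
    pose = np.eye(4)
    pose[:3, :3] = np.array([[c, -s, 0.0],
                             [s, c, 0.0],
                             [0.0, 0.0, 1.0]])
    pose[:3, 3] = translation
    return pose


def pose_error(T_a, T_b):
    """Return (rotation angle in degrees, translation distance) between two poses."""
    delta = np.linalg.inv(T_a) @ T_b
    cos_angle = np.clip((np.trace(delta[:3, :3]) - 1.0) / 2.0, -1.0, 1.0)
    return np.degrees(np.arccos(cos_angle)), np.linalg.norm(delta[:3, 3])


# Hypothetical object pose estimated in the frame of camera 1.
T_cam1_obj = make_pose(30.0, [0.1, 0.0, 0.8])

# Hypothetical relative pose that maps camera 1 coordinates to camera 2 coordinates.
T_cam2_cam1 = make_pose(-90.0, [0.5, 0.0, 0.2])

# The same object expressed in the frame of camera 2.
T_cam2_obj = T_cam2_cam1 @ T_cam1_obj

# A second, independent estimate from camera 2 could be compared against
# T_cam2_obj with pose_error to decide whether both views agree.
angle_deg, distance = pose_error(T_cam2_obj, T_cam2_obj)
```

In this sketch, a small `pose_error` between the transferred pose and an independent estimate from the second view would indicate that the two single-view estimates describe the same physical object.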